In traditional machine learning and data science applications, model selection is a time-consuming process that generally requires a significant amount of statistical background. Azure Machine Learning completely breaks this paradigm. As you will see in the next few posts, model selection in Azure Machine Learning requires nothing more than a basic understanding of the problem we are trying to solve and a willingness to let the data pick our model for us. Let's take a look at our experiment so far.

|

| Experiment So Far |

|

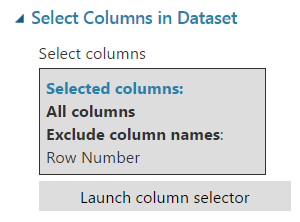

| Select Columns in Dataset |

|

| Initialize Model |

"Anomaly Detection" is the area of Machine Learning where we try to find things that look "abnormal". This is an especially difficult task because it requires defining what's "normal". Fortunately, Azure ML has some great tools that handle the hard work for us. These types of models are very useful for Fraud Detection in areas like Credit Card and Online Retail transactions, as well as Fault Detection in Manufacturing. However, our training data already has the fraudulent transactions labelled, so Anomaly Detection may not be what we're looking for. That said, one of the great things about Data Science is that there's rarely a single right answer. Feel free to add some Anomaly Detection algorithms to the mix if you would like.
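To make "defining what's normal" concrete, here is a minimal sketch of one of the simplest possible rules: treat a value as anomalous when it lies more than a chosen number of standard deviations from the mean (a z-score rule). This is an illustration under stated assumptions, not how Azure ML's anomaly detection modules work internally; the function name, the threshold and the transaction amounts are all hypothetical.

```python
from statistics import mean, pstdev


def flag_anomalies(values, threshold=3.0):
    """Return the indices of values whose z-score exceeds `threshold`.

    Assumption: "normal" means "close to the mean", measured in
    population standard deviations.
    """
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values are identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical transaction amounts: mostly small, one very large.
amounts = [12.5, 9.99, 15.0, 11.25, 13.4, 10.0, 14.1, 12.0, 9.5, 2500.0]
print(flag_anomalies(amounts, threshold=2.5))  # → [9]
```

The threshold is itself a definition of "normal", and an extreme value also inflates the standard deviation it is measured against: with only ten values, no point can reach a z-score above 3, so the default threshold would flag nothing here. That is part of why defining "normal" is harder than it looks.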

"Classification" is the area of Machine Learning where we try to determine which class a record belongs to. For instance, we can look at information about a person and attempt to determine whether they are likely to buy a particular product. This technique requires an initial set of data where we already know the classes. It is one of the most commonly used types of algorithm and can be found in almost every subject area. It's no coincidence that our variable of interest in this experiment is called "Class". Since we already know whether each of these transactions was fraudulent or not, this is a prime candidate for a "Classification" algorithm.
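As a rough illustration of what "learning from data where we already know the classes" means, the sketch below fits a two-class logistic regression (one of the algorithms listed later in this post) using plain batch gradient descent. It is a teaching sketch under stated assumptions, not Azure ML's implementation: the single feature (a hypothetical transaction amount scaled to [0, 1]), the learning rate, the epoch count and the tiny labelled data set are all made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.5, epochs=4000):
    """Fit weights and a bias by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of the log-loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict_class(w, b, x, threshold=0.5):
    """Return 1 ("fraudulent") if the predicted probability reaches the threshold."""
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return 1 if p >= threshold else 0


# Hypothetical labelled data: one feature per record, plus the known "Class".
X = [[0.1], [0.2], [0.3], [0.7], [0.8], [0.9]]
y = [0, 0, 0, 1, 1, 1]  # 1 = fraudulent, 0 = legitimate
w, b = train_logistic_regression(X, y)
print([predict_class(w, b, x) for x in X])  # → [0, 0, 0, 1, 1, 1]
```

The key point is the `y` list: the algorithm can only learn the boundary between the classes because every training record already carries its label.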

"Clustering" is the area of Machine Learning where we try to group records together to identify which records are "similar". It belongs to a category of algorithms known as "Unsupervised Learning" techniques. They are unsupervised in the sense that we are not telling them what to look for. Instead, we simply unleash the algorithm on a data set to see what patterns it can find. This is extremely useful in Marketing, where being able to identify "similar" people is important. However, since we already know which transactions are fraudulent, it's not very useful for our situation.
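For contrast with Classification, here is a minimal k-means sketch (Lloyd's algorithm), one common clustering technique, chosen here purely for illustration. Notice that it never sees a label: it only groups points by distance. The customer data (age, annual spend in thousands) is hypothetical, and seeding the centroids with the first k points is a simplification; practical implementations usually use random or k-means++ seeding.

```python
import math


def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
        for p in points
    ]


def kmeans(points, k, iterations=100):
    """Plain k-means: alternate assignment and centroid-update steps."""
    centroids = [tuple(p) for p in points[:k]]  # naive seeding: first k points
    for _ in range(iterations):
        labels = assign(points, centroids)
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                # Move the centroid to the mean of its members.
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, assign(points, centroids)


# Hypothetical customers: (age, annual spend in thousands). No labels are given.
customers = [(25, 1.0), (27, 1.2), (26, 0.9), (60, 8.0), (62, 7.5), (58, 8.2)]
centroids, labels = kmeans(customers, k=2)
print(labels)  # → [0, 0, 0, 1, 1, 1]
```

The cluster numbers are arbitrary group identifiers, not "fraud" or "not fraud"; turning them into something meaningful is left to the analyst, which is why this approach adds little when the labels are already known.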

"Regression" is the area of Machine Learning where we try to predict a numeric value by using other attributes related to it. For instance, we can apply "Regression" techniques to information about a person to predict their salary. "Regression" has quite a bit in common with "Classification"; in fact, quite a few algorithms have variants for both. However, our experiment only needs to predict a binary (1/0) variable. Therefore, it would be inappropriate to use a "Regression" algorithm.
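One way to see why a "Regression" algorithm is a poor fit for a 1/0 target: an ordinary least-squares line fitted to binary labels produces continuous scores that can fall below 0 or above 1, so they are neither classes nor probabilities without extra post-processing. A minimal sketch, using hypothetical data:

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical feature values and their known 1/0 "Class" labels.
xs = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
ys = [0, 0, 0, 1, 1, 1]
slope, intercept = fit_line(xs, ys)
for x in (0.0, 0.5, 1.2):
    # Each line shows the input and the raw regression score for it.
    print(x, round(slope * x + intercept, 3))
```

Here the score is about -0.28 at x = 0 and about 1.59 at x = 1.2: numbers on a continuous scale rather than the 1/0 answer we actually want.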

Now that we've decided "Classification" is the category we are looking for, let's see what algorithms are underneath it.

[Figure: Classification]

Two-Class Classification Algorithms:

Two-Class Averaged Perceptron

Two-Class Boosted Decision Tree

Two-Class Decision Forest - Resampling: Replicate

Two-Class Decision Forest - Resampling: Bagging

Two-Class Decision Jungle - Resampling: Replicate

Two-Class Decision Jungle - Resampling: Bagging

Two-Class Locally-Deep Support Vector Machine - Normalizer: Binning

Two-Class Locally-Deep Support Vector Machine - Normalizer: Gaussian

Two-Class Locally-Deep Support Vector Machine - Normalizer: Min-Max

Two-Class Logistic Regression

Two-Class Neural Network - Normalizer: Binning

Two-Class Neural Network - Normalizer: Gaussian

Two-Class Neural Network - Normalizer: Min-Max

Two-Class Support Vector Machine

A keen observer may notice that the "Two-Class Bayes Point Machine" model was not included in this list. For some reason, this model cannot be used in conjunction with "Tune Model Hyperparameters". We will handle this in a later post.
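Several of the algorithms in the list appear once per normalizer option (Binning, Gaussian, Min-Max). As a rough guide to what those options mean, here are textbook versions of the three transformations applied to a single numeric column. These are assumptions for illustration: the function names are hypothetical, and Azure ML's exact formulas (for example, how many bins it uses or how the bins are chosen) may differ.

```python
from statistics import mean, pstdev


def min_max(values):
    """Rescale linearly so the smallest value maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def gaussian(values):
    """Standardize to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def binning(values, n_bins=4):
    """Equal-width binning: replace each value by its bin index, scaled to [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo or n_bins < 2:
        return [0.0 for _ in values]
    width = (hi - lo) / n_bins
    # The maximum value would land in bin n_bins, so clamp it into the last bin.
    indices = [min(int((v - lo) / width), n_bins - 1) for v in values]
    return [i / (n_bins - 1) for i in indices]


column = [0, 5, 10]
print(min_max(column))   # → [0.0, 0.5, 1.0]
print(gaussian(column))
print(binning(column))   # → [0.0, 0.6666666666666666, 1.0]
```

Because each normalizer reshapes the inputs differently, the same algorithm can perform differently under each one, which is a good reason to let the data decide between the variants rather than picking one up front.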

Hopefully, this post helped shed some light on "WHY" you would choose certain models over others. We can't stress enough that the path to success is to let the data decide which model is best, not "rules-of-thumb" or theoretical guidelines. Stay tuned for the next post, where we'll use large-scale model evaluation to pick the best possible model for our problem. Thanks for reading. We hope you found this informative.

Brad Llewellyn

Data Scientist

Valorem

@BreakingBI

www.linkedin.com/in/bradllewellyn

llewellyn.wb@gmail.com
